Motor, home and health insurers have never had more access to data about their customers. Thanks to the internet of things (IoT), insurers have a growing ability to assess how we drive, live our lives and protect our assets, and to price us according to our individual risk profile. There is also an opportunity to offer feedback and incentives to insurance customers to encourage better behaviour, thereby reducing claims and enabling insurers to offer discounted rates to less risky customers.

However, by sharing this information about ourselves, could some consumers be penalised for things that are beyond their control, such as their age, a genetic predisposition to disease or even their personality? Under the European Union’s Gender Directive, insurers have not been able to charge men and women different prices since 2012, even if the difference is based on actuarially sound analytics. But there are many other personal attributes that could be used to determine what individuals are charged for their insurance.

When Admiral was forced to withdraw its plans to partner with Facebook late last year, after the social media company said the scheme would breach its privacy rules, it raised an important moral question. Just because an insurance company has the ability to analyse customers’ use of social media and to use that information as a rating factor doesn’t necessarily mean it should.

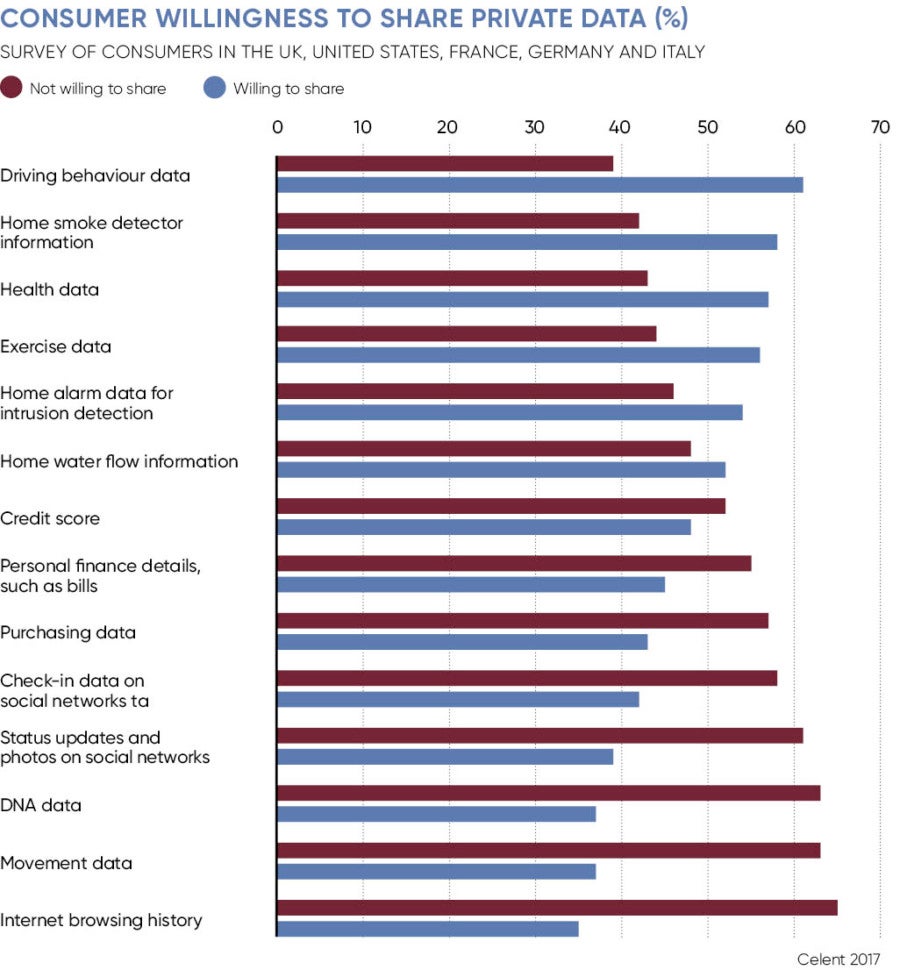

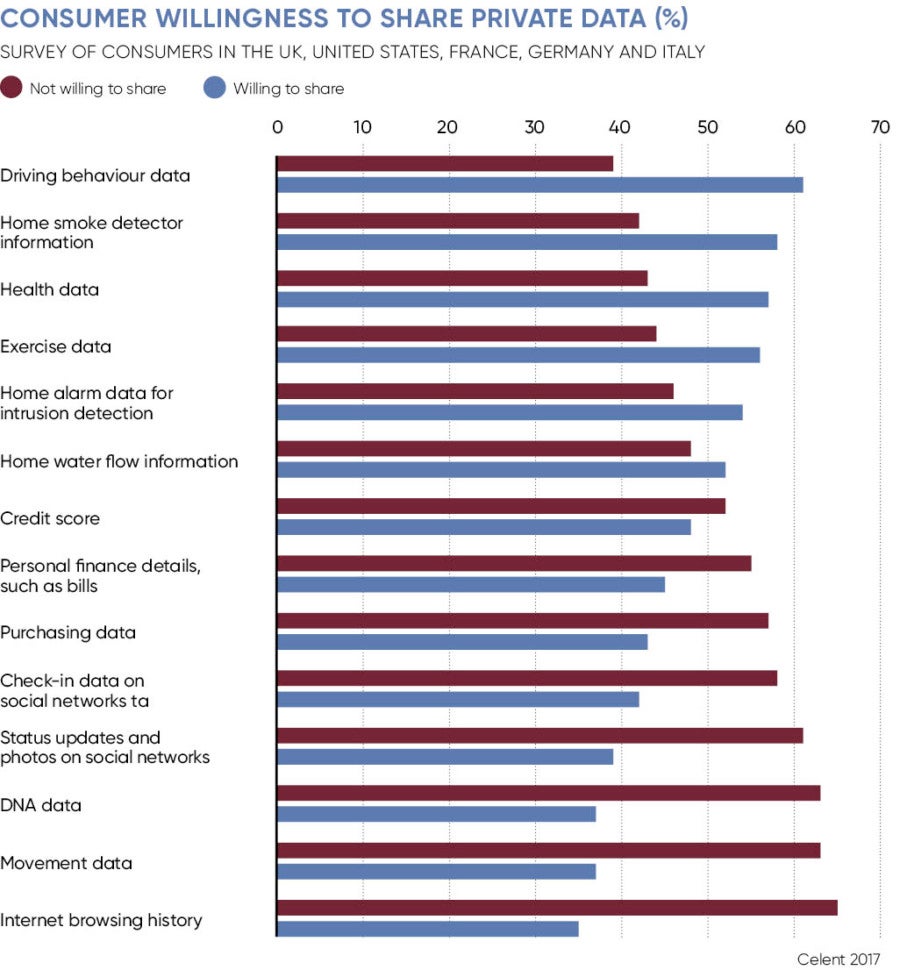

“There are ethical issues here and a need for strong regulation in place,” says Nicolas Michellod, a senior analyst in Celent’s insurance practice. “We carried out some research last year asking consumers what they think about insurance companies using their data on social networks to provide new products or to track them for claims fraud. It’s clear there’s a big gap between what insurance companies think they should be allowed to do and what the consumers think.”


Data has always been a commodity in the insurance business, allowing underwriters to assess and price risk accurately, from motor insurance through to major commercial operations. But as insurers mine data from an increasingly vast range of sources, accelerating the need for artificial intelligence to create meaning from it, they will inevitably gain access to more and more information about their customers.

Regulators are watching carefully as insurers tap consumer data. While the Financial Conduct Authority took the decision to drop its probe into insurers’ use of big data last year, acknowledging that it did not want to hinder industry innovation and noting the use of information about consumer behaviour was “broadly positive”, it also noted there could be “some risks to consumer outcomes” with some individuals finding it harder to access affordable cover.

In our personal lives, connected cars, connected homes and wearable devices promise ever greater insight into a customer’s lifestyle, behaviour and circumstances. However, insurance companies should use this information responsibly, says Andrew Brem, chief digital officer at Aviva, particularly as pricing becomes more tailored to each individual rather than pooled across the entire marketplace.

“Social, public, IoT, genetic and other new forms of data might indeed reveal much greater variance in risk than we can measure today, and the natural implication would be greater extremes of pricing,” he explains. “This could raise significant questions about fairness in society, and the insurance industry will need to work with governments and regulators to agree what factors society feels we should, and should not, take into account when pricing risk.

“This is a rapidly evolving area and we continually challenge ourselves as to whether our customers would consider use of these data sources acceptable. Insurance has a social role to play in helping those in danger of falling out from insurance, so we’ll need to look at solutions for everyone.”

Telematics insurance products are hailed as one example of the industry innovating and leveraging data to cater to the needs of a group of disadvantaged consumers. In return for having their driving behaviour monitored, younger drivers are able to access more affordable motor insurance.

For 18 to 20-year-olds, who pay an average of £972 a year for their cover, compared with an average of £367 for other drivers, according to the RAC, this can make all the difference. Sixty-two per cent of young drivers see insurance as the biggest barrier to owning and running a car.

Not only does telematics provide young drivers with access to more affordable cover, it is a popular example of how a feedback loop can improve the underlying risk. “Our insurer clients find that only a tiny fraction of customers – typically less than 3 per cent – don’t respond to feedback on their driving behaviour,” says Selim Cavanagh, managing director of Wunelli and vice-president of LexisNexis. “So there’s a massive societal benefit, and it also helps the individual pay less and still be mobile. Feedback is a really powerful tool.

“With telematics, younger drivers pay around 41 per cent less for their insurance because telematics tells them they are being monitored, and most young people are willing to listen to feedback and modify their behaviour.”

There are clearly benefits to be gained at all levels when consumers opt to share their personal information with insurers and when this information is used to reduce risky behaviour. However, while certain rating factors, such as driving style, are within customers’ control, there is very little that can be done to alter or improve other factors insurers could use to price cover.

In the United States, there has been a backlash against biometric screening as part of corporate wellness programmes. Certainly there is a feeling that it is morally wrong to deny health insurance to individuals because of their predisposition to certain diseases. “Big data and the IoT can be used by the insurance industry as a force for good or a force for bad,” says Mark Williamson, a partner at law firm Clyde & Co. “It’s about having the right checks and balances in place.

“One of the big concerns is a moral argument around using uncontrollable rating factors. Should an individual be penalised for their genetic make-up when there’s nothing they can do about it? These uncontrollable factors might be things individuals are not happy to share or that they may not even know about themselves. Is that morally correct?”

He thinks these issues are now a matter of public policy, with the onus on the industry, governments and regulators to keep up with developments. It is anticipated the EU’s General Data Protection Regulation could be instrumental in determining how insurers should and shouldn’t be allowed to use customers’ data moving forward.

“As a society we shouldn’t be looking to exploit people who are naive and vulnerable,” Mr Williamson concludes. “And if by drilling down to an individual level, insurers are discriminating against these individuals, is it right for a sector founded on the concept of pooling of individual risks to be doing that or is the government and regulator going to have to do something about that?”