Companies are becoming increasingly aware of unconscious bias in the hiring process, yet so many recruitment programmes still fail.

One way of overcoming biases ingrained in recruitment is through building an algorithm into the hiring process, but is a computer really the best at identifying talent?

What is an algorithm?

The Oxford Dictionary defines it as a process or set of rules to be followed in calculations or other problem-solving operations, especially by a computer. For example, an employer can use an algorithm to select applicants with a first-class degree, or those with a particular degree subject it is looking for in a graduate intake.

Algorithms are essentially instructions put into code, explains Lydia Nicholas, senior researcher at Nesta. “Algorithms are rules behind any single field that you put in. You can automate this process. For example, you can assign different scores for which university a candidate went to and add scores at every stage of the process. Did they do the right degree? You can use algorithms to recognise the bias or enhance the existing bias, but the algorithm is only a function of what an employer wants in a system.”
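The scoring approach Ms Nicholas describes can be sketched in a few lines of Python. This is a minimal illustration only: the `Applicant` fields, the university names and every point value are hypothetical, chosen to show how such rules work rather than how any real employer screens candidates.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    name: str
    university: str
    degree_subject: str
    degree_class: str


# Hypothetical rules: an employer would choose its own tables and values.
UNIVERSITY_POINTS = {"University A": 3, "University B": 2}
TARGET_SUBJECTS = {"Mathematics", "Computer Science"}


def score(applicant: Applicant) -> int:
    """Add up points for each rule the employer has written down."""
    # Universities not in the table get a default of 1 point.
    points = UNIVERSITY_POINTS.get(applicant.university, 1)
    # "Did they do the right degree?"
    if applicant.degree_subject in TARGET_SUBJECTS:
        points += 2
    # Extra points for a first-class degree.
    if applicant.degree_class == "First":
        points += 2
    return points
```

Every number in the sketch is an employer's choice. Give all universities the same points and that particular preference disappears; widen the gap and it is amplified, which is the sense in which the algorithm is "only a function of what an employer wants".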

Traditionally, many large corporates have used algorithms to sift through huge swathes of online applications in a bid to secure the perfect candidate for the role and to make the process as objective as possible. However, in the last year or two, large corporates have started to use algorithms to recruit beyond the pool of candidates produced by the Russell Group of universities in a bid to improve their diversity profile.

Diverse workforce

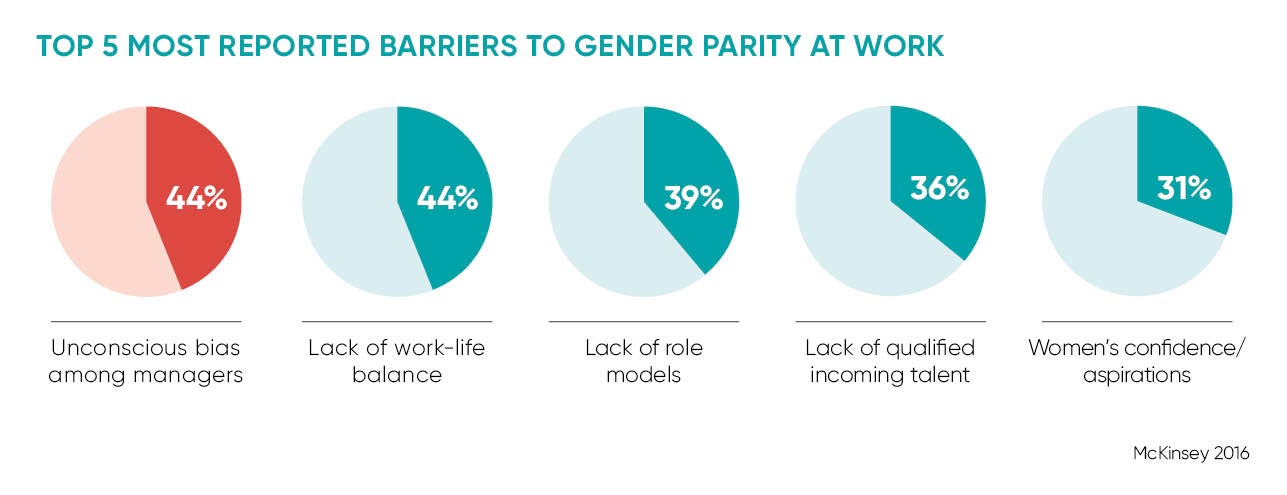

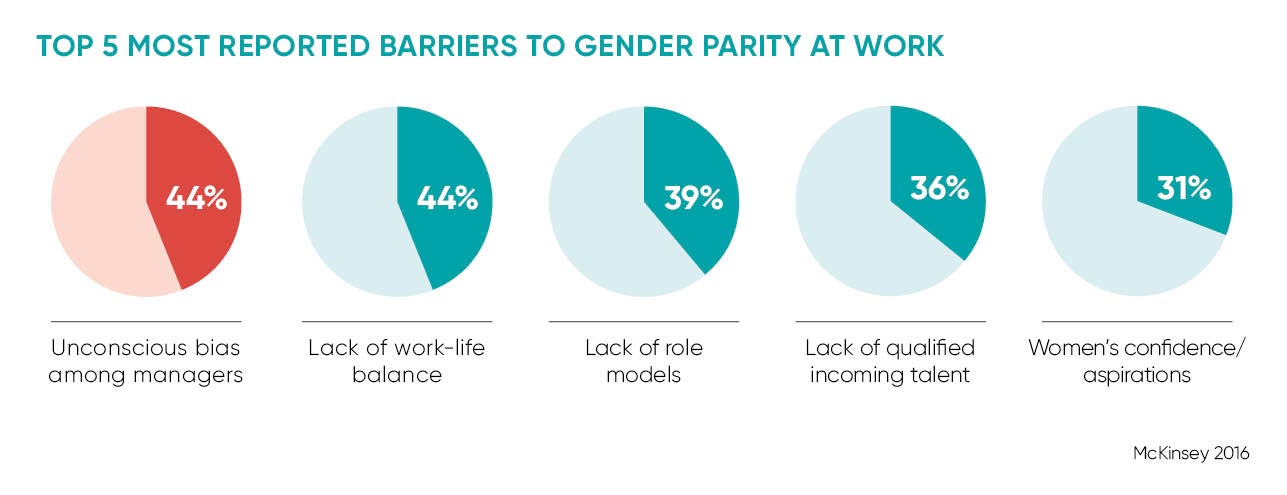

Unconscious bias can creep in at several points during the selection and recruitment stage, warns James Meachin, head of assessment at business psychologists Pearn Kandola. “The biggest risk areas are where there is limited accountability for decision-making and there is less motivation for them to think very carefully about why they are making a decision. For example, a person in HR or an outsourced agency who is screening large volumes of CVs.

“Another area is where there is less structure in decision-making and greater opportunity for people to influence each other. For example, assessment centres commonly have ‘wash-up’ discussions where the assessors gather at the end of the centre to review candidates’ performance. Research shows that these conversations, when assessors are tired and there is time pressure, tend to introduce generalised views.”

Algorithms have a place because we know that when employers are left to their own devices, they are capable of conscious or unconscious bias, says Mr Meachin. “Algorithms give us an objective measurement for what we think we’re measuring. An algorithm just guarantees that you’re measuring something in an objective way.”

Automated processes such as algorithms are most commonly used early on in the screening process, explains Mr Meachin. “For example, in automatically scoring aspects of application forms and for objective tests such as reasoning tests and situational judgment tests. They’re also used in combining scores at interviews and assessment centres to form an overall ‘index’ of ability.”
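Combining scores into an overall index could look something like the sketch below. It assumes each stage is scored on the same 0 to 100 scale and that the employer has assigned a weight to each stage; the stage names, weights and the `overall_index` function are hypothetical, and a weighted average is only one of several ways such an index might be formed.

```python
def overall_index(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of a candidate's stage scores.

    Only stages present in `scores` are counted, so the weights are
    renormalised if a candidate has not completed every stage.
    """
    total_weight = sum(weights[stage] for stage in scores)
    if total_weight == 0:
        raise ValueError("at least one scored stage must have a non-zero weight")
    weighted_sum = sum(scores[stage] * weights[stage] for stage in scores)
    return weighted_sum / total_weight


# Hypothetical weights chosen by the employer, not by the computer.
weights = {"reasoning_test": 3, "situational_judgment": 3, "interview": 4}
candidate = {"reasoning_test": 70, "situational_judgment": 60, "interview": 80}

index = overall_index(candidate, weights)
```

The calculation itself is mechanical and repeatable, but the decision about which stages count, and by how much, remains a human one.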

Patrick Voss, managing director at Jeito, argues that algorithms can be used to provide a richer and more balanced set of information about potential candidates. “In particular, for roles that may require specific skill sets, for example telesales, algorithms can help filter those less likely to stay in the role for longer,” he says.

The flaws

Mr Voss adds that algorithms can save time by filtering candidates down to a shortlist, but cautions that they will only filter on the data they are given and the factors they have been programmed to look for.

Mr Meachin is cautious about solely relying on algorithms to remove bias from the selection and recruitment process. He says: “Built-in algorithms in a graduate-screening process might delay the bias and improve diversity, and while that is good to have, what organisations aren’t doing as much is dealing with the people aspect of the bias. For example, how can an organisation ensure that its recruiters are well trained to minimise the bias?”


However, it’s a mistake to say computers can identify talent, says Chris Dewberry, senior lecturer in organisational psychology at Birkbeck, University of London. “Computers can be programmed to collect some data relevant to selection. But since it’s humans who decide which tests to use and humans who decide how to combine responses to items to obtain total scores, it’s ultimately humans who are deciding who is selected, not computers,” says Dr Dewberry.

For the foreseeable future, it won’t be possible to take people out of the decision-making process, Mr Meachin concludes. “Automated processes aren’t sophisticated enough to make fine-grain judgments about abilities such as influencing skills. Even if we get to the stage where computers could replace people in making selection decisions, it’s unlikely that hiring managers will want to relinquish control.”
