At the end of a year pockmarked by an unprecedented number of cyberattacks, it is clear that employees and users are often targeted by hackers seeking access to an organisation.

The evidence points to the reality that people are fallible. However, all is not lost, according to Dr Anton Grashion, Cylance’s senior director of product and marketing for Europe, the Middle East and Africa. “As an industry we have failed to protect people from attackers and the onus is on us to prevent harm before it can even begin to have a negative effect on individuals or businesses,” he says.

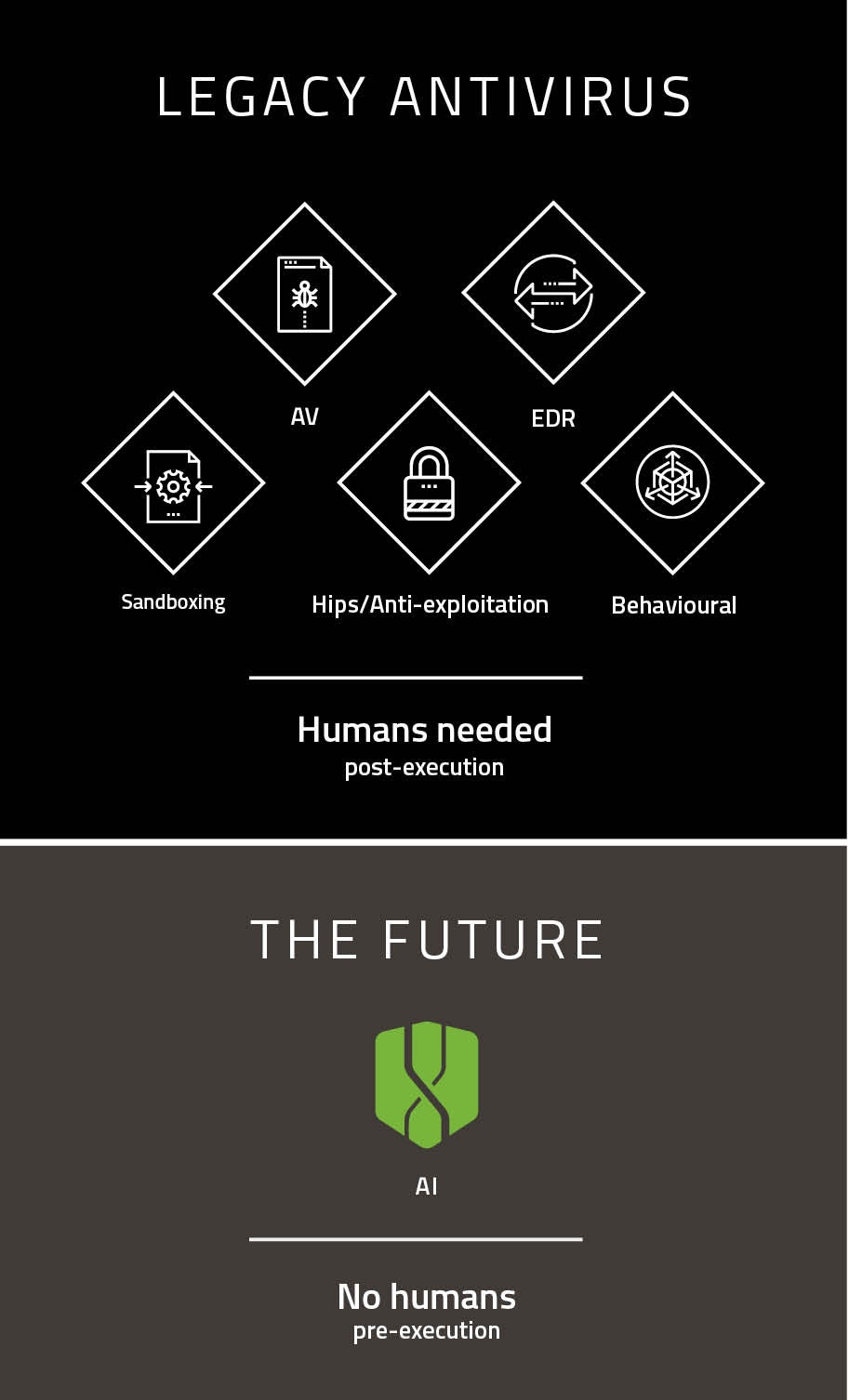

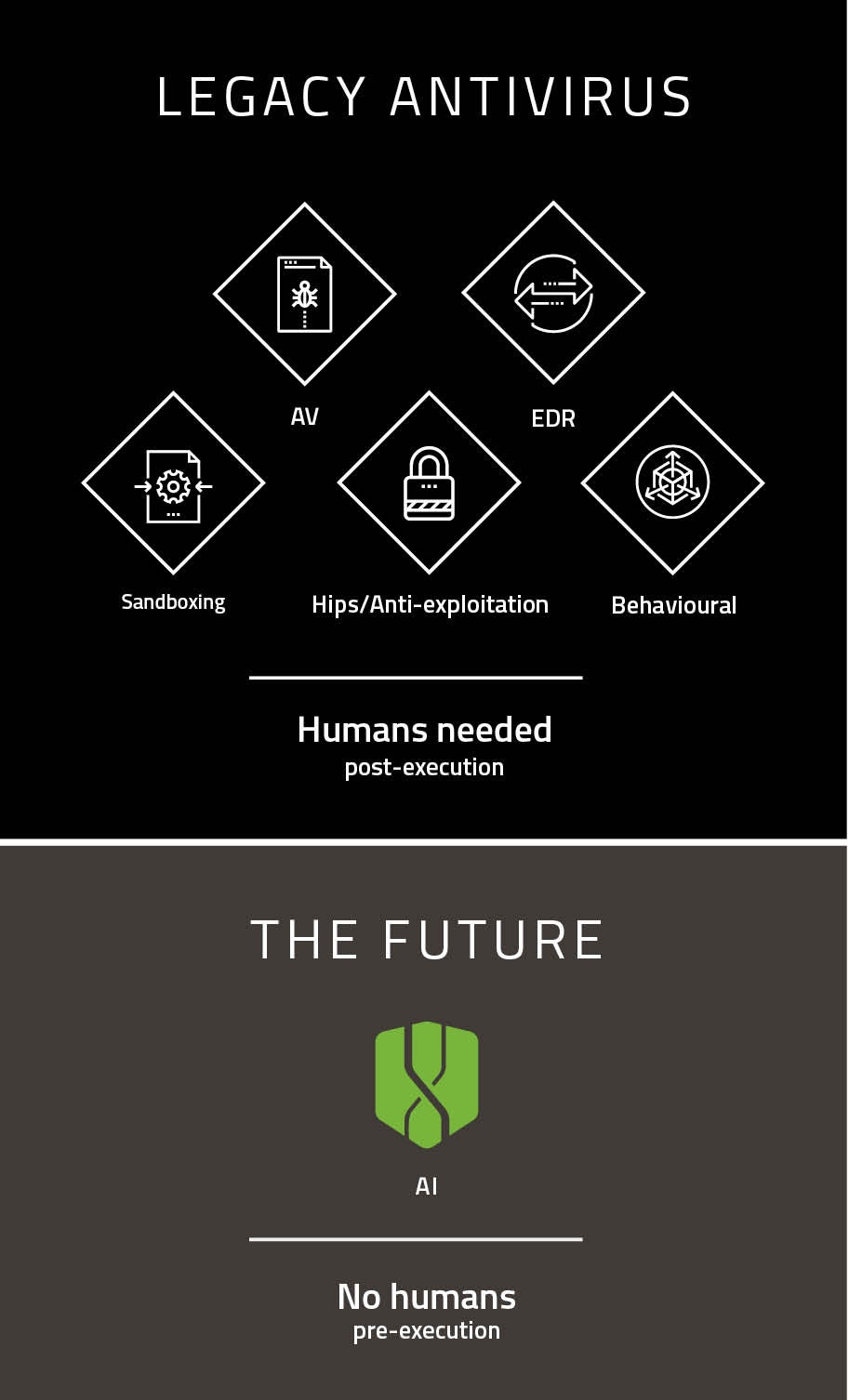

Dr Grashion, whose company vows “to protect every end-point under the sun”, believes the solution to this mushrooming issue is to combine human effort with machine speed and accuracy. He says: “With artificial intelligence (AI) doing the bulk of the work of pinpointing suspicious malware or activity, humans can then focus on a smaller group of suspicious files or behaviour to determine what is potentially dangerous.

“This way, people are no longer faced with an insurmountable amount of data that they can’t process quickly enough to be effective. Ultimately, we want to give people time back to be more productive.”

According to Dr Grashion, recent technological advances, specifically in AI, now make it possible to prevent 99.8 per cent of attacks before they happen. He says: “Another benefit of utilising machine learning and AI is that they allow us to remove clunky and intrusive security controls, and use more intelligent, lightweight and effective countermeasures against cybercriminals.

“We humans are hardwired to trust other people and that is precisely why social engineering works for hackers. That is not going to change any time soon, so to boost cyberdefences you have to help people make the right decisions or, even better, avoid having to make a decision, which is only possible if your systems are intelligent enough to support this approach.

“We can’t depend on ordinary people, outside of your security and IT teams, to become cyberexperts. Frankly, it’s unfair of us to ask it of them. The landscape is constantly changing and cyberattacks are evolving, so how do you keep pace?

“Even old, known malware continues to cause brand damage, breaches and financial losses. How do you ensure you are covered from new or evolving attacks further down the line when we can’t even seem to gain a handle on threats that were identified five years ago?”

To explain the current approach towards cybersecurity, Dr Grashion uses the analogy of a rowing boat with a large hole in the bottom, taking on water. “The first thing to do is reduce the flow of water into the boat,” he says. “You don’t want to have to get a bigger bucket to try and bail the water out, and that is where we are today.

“At present we are reactive, springing into action only after attackers have breached our systems and compromised the data we have tried to protect. Over the past five years, the balance has shifted disproportionately from prevention towards detection and response. The prevailing thought has been that 100 per cent prevention is not possible, so you must invest more in detecting breaches and responding accordingly.

“But, returning to the boat analogy, with AI we can shrink that hole significantly so that there is just a tiny trickle of water. And at the risk of mixing my metaphors, the present detect-and-respond logic is faulty because it is as though you are choosing who is the best undertaker to remove the victims.

“By improving your prevention stance, there is no ‘patient zero’. Organisations such as Cylance use AI and machine learning to predict and prevent attacks before they have a chance to sink your boat. Therefore, I urge business leaders to rebalance their cybersecurity budgets to reflect these improved defences, because they work. You still need to have detection and response covered, though; even with AI, a minuscule number of threats still get through.”

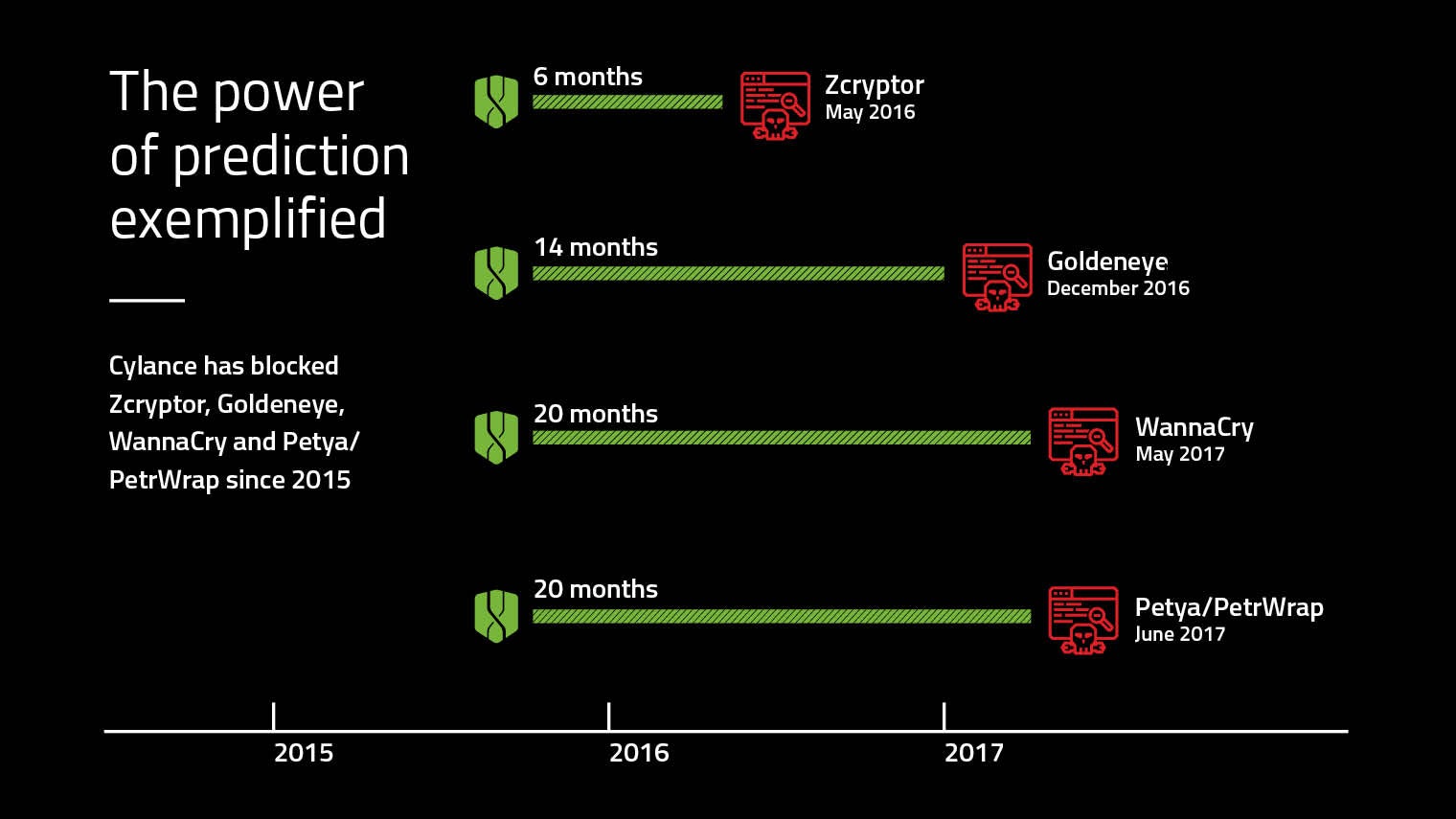

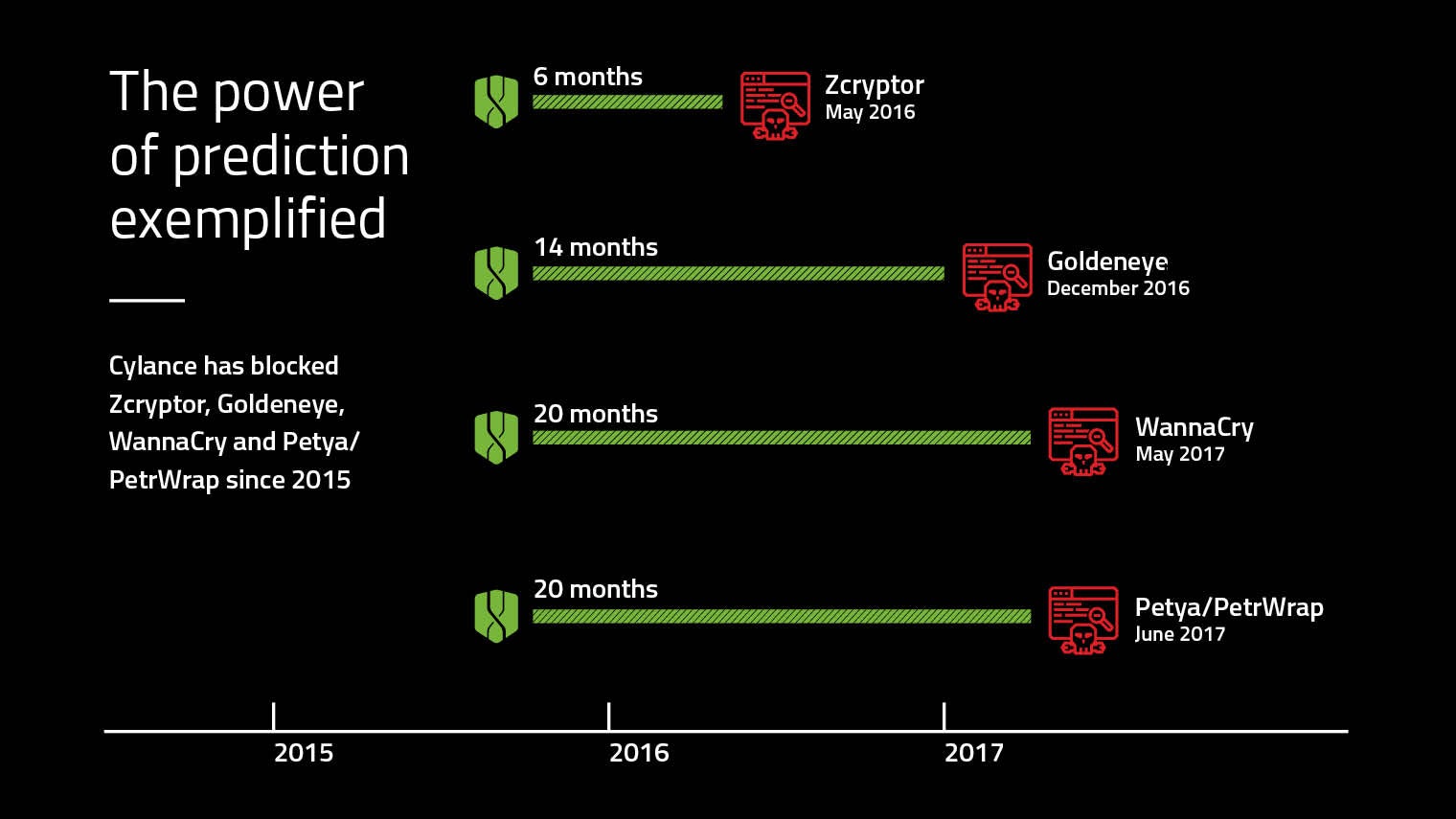

California-headquartered software company Cylance, established in 2012, spent two years working on a product that could prevent many of the headline cyberattacks of 2017. “We took petabytes of data, both good and bad, and trained our AI model to differentiate between these categories of executables,” explains Dr Grashion. “The power of this AI-based approach is that the model doesn’t need to have seen a specific piece of malware to predict and prevent it.

“We stopped a whole host of ransomware, including WannaCry, Petya, PetrWrap, GoldenEye and ZCryptor, with a mathematics-led AI model from 2015, long before they existed. In theory, the model could not have known about them, but it worked because of the predictive power of the AI model. In layman’s terms, our model could predict these new, emerging threats based on factors and features they share with threats we already knew about. Those factors mark these unknown files as likely malware, so they can be stopped in their tracks.”

To highlight how complex and robust the end-point-deployed Cylance AI model is, Dr Grashion reveals that it uses some two million features. “One of them is based on the entropy of a file. If you were to use only that feature, it would be fairly good at identifying malware; combine it with the other features and you get a good idea of where the prevention accuracy comes from. Further, you don’t need to be connected to the internet for cloud lookups or to track behavioural changes; it still works perfectly if you are offline or on air-gapped critical infrastructure.”
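File entropy, the feature Dr Grashion singles out, is usually measured as the Shannon entropy of a file's bytes, H = -Σ p_i log2 p_i, where p_i is the share of bytes with value i. It ranges from 0 to 8 bits per byte, and compressed or encrypted ("packed") payloads tend to sit near the top of that range. The Python sketch below computes it. It is an illustration under stated assumptions, not Cylance's implementation: the `HIGH_ENTROPY_THRESHOLD` value and the `looks_packed` helper are hypothetical, and a real model would combine this signal with many other features rather than use a single cut-off.

```python
import math
import sys
from collections import Counter


def byte_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    counts = Counter(data)  # iterating over bytes yields ints 0..255
    total = len(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def file_entropy(path: str) -> float:
    """Read a whole file in binary mode and return its byte entropy."""
    with open(path, "rb") as f:
        return byte_entropy(f.read())


# Hypothetical cut-off, chosen for illustration only (not a Cylance value).
HIGH_ENTROPY_THRESHOLD = 7.2


def looks_packed(path: str) -> bool:
    """Hypothetical helper: flag files whose entropy suggests packing."""
    return file_entropy(path) >= HIGH_ENTROPY_THRESHOLD


if __name__ == "__main__":
    # Usage: python entropy.py file1 file2 ...
    for p in sys.argv[1:]:
        print(f"{p}: {file_entropy(p):.3f} bits/byte, packed={looks_packed(p)}")
```

A file of a single repeated byte scores 0, while a file in which all 256 byte values appear equally often scores the maximum of 8. On its own, a high score only says the content looks random; plenty of legitimate compressed files score highly too, which is why entropy works best as one feature among many.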

With the introduction of the General Data Protection Regulation (GDPR) looming, there has been much fretting by business leaders about cybersecurity, given the numerous breaches that have compromised industry-leading organisations. Dr Grashion posits that if organisations alter their thinking – from detect and respond to prevention, using AI – then GDPR need not be anywhere near as threatening, in terms of both finance and reputation, as many fear.

“There is a lot of nonsense talked about AI and people have conflicted views about it,” he adds. “The likes of Elon Musk and Stephen Hawking might say that AI will lead to the end of the world, but what we are discussing in this instance is solely cybersecurity. And this application is quite simple: it determines whether an executable file is good or bad. The fact is the more advanced cyberattacks become, the more we will have to rely on AI – people alone don’t stand a chance.”

For more information please visit www.cylance.com