To what extent is artificial intelligence (AI) currently on the agenda of boards across the UK?

Some boards are really aware of it and some are behind where we think they need to be. It varies according to how relevant digital disruption has been to the board. Those who are aware of it tend to be in sectors that have been disrupted, such as technology or media and entertainment, where there has already been a high degree of change, or in highly regulated industries such as banking. Others are far less aware or, if they are aware, it’s more about its application in other areas. Most boards are not considering AI and the opportunities it presents for improving risk management.

At board level, is AI currently viewed more as an opportunity or a risk?

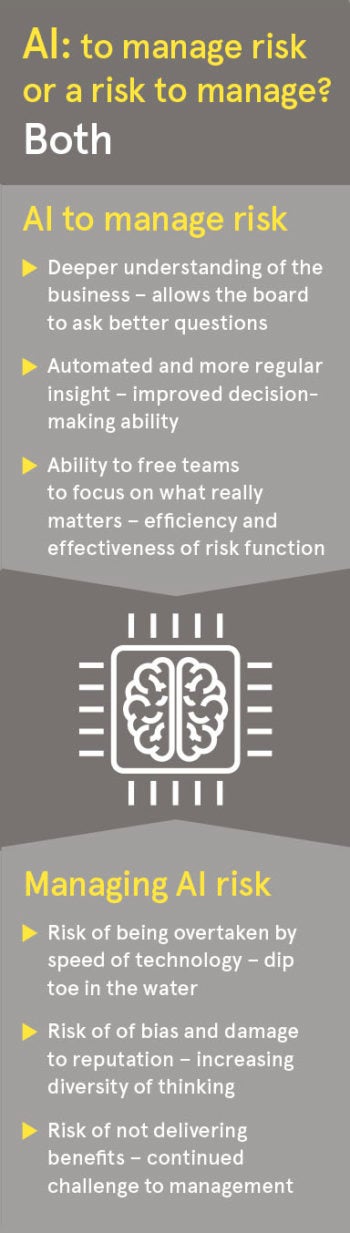

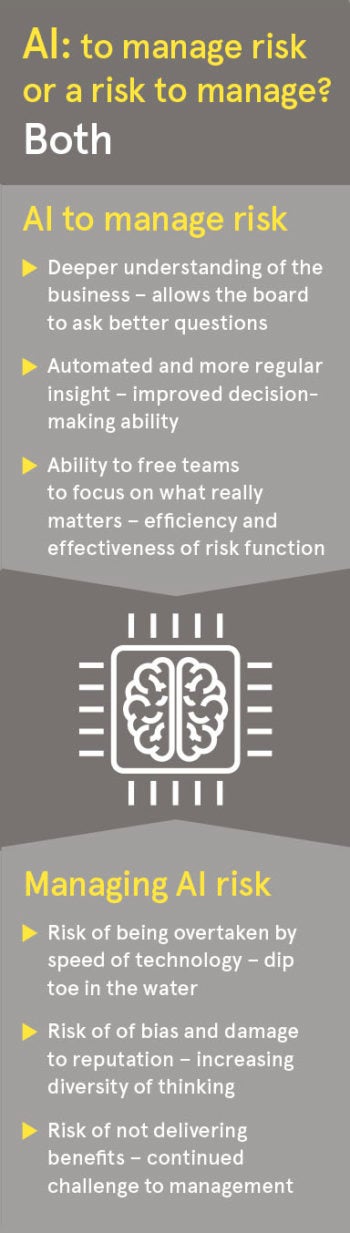

Generally more as an opportunity, but they tend to see it as an efficiency play rather than an opportunity to enhance risk management procedures and processes. Sometimes they are surprised by the increase in visibility it gives you and the consistency you get across a wider reach of your business. A combination of AI, automation and analytics enables you to drill risks up and down in a much more detailed way, and to get into far greater depth of issues at a sub-reporting or sub-business unit level than has ever been presented. We are seeing, for example, digital dashboards being put in front of boards that provide the ability to assess risks at a group level, drill down into subsidiary business units, geographies or components of the business and understand how those risks are presenting in detail. This gives boards a huge amount of richness and greater insight around the way risks are being managed, and the opportunities for the business to take on more risk. But not many boards have had that kind of digital dashboard put in front of them; it’s the exception rather than the rule.

What are the risks of ignoring AI or not giving it the attention it deserves at board level?

Boards need to navigate a path between thinking AI is the panacea for all ills and rushing into it without being aware of the risk. Even if AI might be further out in terms of priority or risk, the velocity at which it’s coming isn’t constant. If boards aren’t thinking about how they start to update skills and dip their toe in the water, by the time it’s actually upon them, it’s going to be too late to have built those skills. There’s a real risk around either leaping in head first or leaving it too late. Our recommendation is that all boards should be thinking about what they should be doing to get started. You can’t wait until it’s the number-one risk to your business before you start thinking about how you respond.

What ethical issues does AI create that directors should be aware of?

There’s a real concern that bias may get embedded into AI. Sometimes it may be developed by a group of people who have really good ideas, but find themselves operating in isolation, either as technical specialists or product developers. Boards need to challenge management on how they bring diversity of thought into the process. As they’re starting the AI journey, have they brought in the whole view of the organisation in terms of what it means for the people whose data is being utilised, and what are they doing in terms of what it might mean for their customers and reputation? It’s about thinking as broadly as possible around where this is eventually going to have an impact, rather than what it might be designed to do in the first instance. The best way of countering bias is making sure you have a diversity of people and thought in terms of what it means to the organisation.

What are the implications of that on the board’s role in the business?

We see a potential emerging issue that if you have a fully automated risk environment, it takes out the professional scepticism boards still need to have. You may be able to define a risk appetite and monitor that effectively using some quite sophisticated skills, but boards still need to exercise challenge to management. They still need to trust their stomach and look for the impact of longer-term trends and exercise judgment in terms of holding management to account. That is the principal role of a non-executive board. Technology can enable this, but you shouldn’t be blinded by the data or technology such that it prevents you exercising professional scepticism.

What is the future of AI in risk management, and what role will EY play in helping boards understand its importance and impact?

We are really optimistic about the potential of AI to accelerate the benefits of effective risk management. We think it will empower boards, provide visibility of risks and opportunities, and give boards a far wider and more consistent set of data points on which to make judgments. Our overall starting point is incredibly optimistic around what can be done. Our role at EY is firstly to bring emerging tools and combinations of technologies into the boardroom, and to demonstrate the art of the possible.

We can help companies stand up more effective risk management, either as a standalone service offering or as a managed service. EY is uniquely placed in terms of risk management and commercial acumen to make tools relevant to boards, as well as the right governance and regulatory levels that boards need to operate under.

Rob Walker is an EY partner and the UKI risk leader. He is also a member of the content steering group for the EY UK Centre for Board Matters, a programme delivering insight and thought-provoking discussions and facilitating connections for non-executive directors. For more information please visit www.ey.com/uk/boardmatters or email [email protected]