We live in a world where humans aren’t the only ones that have rights. In the eyes of the law, artificial entities have a legal persona too. Corporations, partnerships and nation states share many of the same rights and responsibilities as human beings. With rapidly evolving technologies, is it time our legal system considered a similar status for artificial intelligence (AI) and robots?

“AI is already impacting most aspects of our lives. Given its pervasiveness, how this technology is developed is raising profound legal and ethical questions that need to be addressed,” says Julian David, chief executive of industry body techUK.

Take Facebook, Amazon or IBM: they are all legal entities with privileges similar to those of citizens, including the right to defend themselves in court and the right to free speech. If IBM has legal personhood, is it possible that Watson, the company’s AI engine, Google’s complex algorithm or Amazon’s Alexa might also qualify for a new status in law, with new responsibilities and rights too?

“The idea isn’t as ridiculous as it initially appears. It’s sometimes a problem that AI is regulated according to rules that were developed centuries ago to regulate the behaviour of people,” says Ryan Abbott, professor of law at the University of Surrey.

“One of the biggest and legally disruptive challenges posed by AI is what to do with machines that act in ways that are increasingly autonomous.”

Justice for robots

This burning issue drove the European Parliament to act two years ago. It considered creating a new legal status – electronic personhood – with a view to making AI and robots so-called e-persons with responsibilities. Its reasoning was that an AI, an algorithm or a robot could then be held responsible if things went wrong, just as a company can be. In response, 156 AI specialists from 14 nations denounced the move in a group letter.

“It makes no sense to make a piece of computer code responsible for its outputs, since it has no understanding of anything that it’s done. Humans are responsible for computer output,” says Noel Sharkey, emeritus professor of AI and robotics at the University of Sheffield, who signed the missive. “This could allow companies to slime out of their responsibilities to consumers and possible victims.”

Certainly, making AI a legal entity would create a cascade of effects across all areas of law. Yet the idea behind the EU e-person status was less about giving human rights to robots and more about making sure AI remains a machine with human backing, which is then accountable in law.

“To be worthy of people’s trust, greater clarity around the status of AI will certainly be important,” argues Josh Cowls, research associate in data ethics at the Alan Turing Institute. “But by carving AI out from the very human decisions about why it works the way it does and giving it a quasi-mythical status as a separate entity, we risk losing the ability to ask questions of the people and companies who design and deploy it.”

Regulation lagging behind innovation

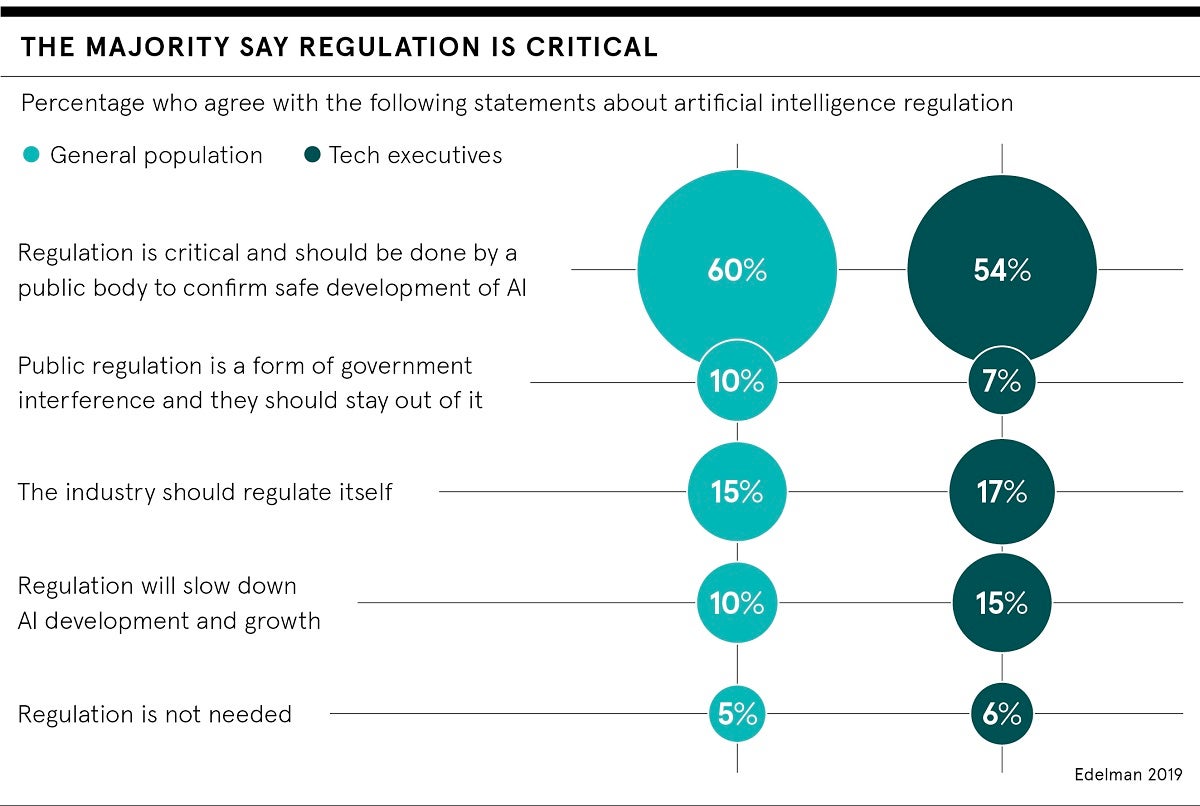

Incoming president of the European Commission, Ursula von der Leyen, has pledged to regulate AI in her first 100 days in office. There’s also a growing international effort: in the past few years 84 groups around the world have suggested ethical principles for AI, according to researchers at ETH Zurich. In the UK, the Office for AI, the Centre for Data Ethics and Innovation, and the Information Commissioner’s Office are all focused on these issues, yet these are still early days.

“I’m sceptical about the possibility of a position on AI in the legal framework,” says Matt Hervey, head of AI at Gowling WLG. “We cannot even agree on a definition, let alone its place in law. AI covers a range of tools being used in a growing number of applications. But given the potential disruptive impact of AI, legislators are right to consider whether new laws are justified.

“Yet laws and regulation tend to lag significantly behind technological change. It took over a decade for our copyright law to catch up with the video recorder and the same again for the iPod. Lawmakers cannot predict what tech companies will produce and these firms often fail to predict how the public will use the technology.”

Who is responsible for AI’s decisions?

Worryingly, none of the current AI ethical codes that the Open Data Institute analysed carries legal backing, forms of recourse or penalties for breaking them. To date there are few legal provisions for AI. It’s only a matter of time before tighter regulation comes into force, especially if the general public is to trust AI’s meteoric rise and its use by technologists.

“This is unlike other professions such as medicine or law where people can be banned from practising the profession if they break an ethical code,” explains Peter Wells, the Open Data Institute’s head of public policy.

So, if an algorithm makes an autonomous decision, who’s responsible? Experts agree that a clear chain of human accountability is crucial. The EU’s General Data Protection Regulation helps in this regard, but with so much money and intellectual property at stake now and in the future, the law may need to do more.

“We are seeing a massive commercialisation of ethics with many companies setting up token ethics boards that do not penetrate into the core issues of their business. This kind of ethics-washing is designed to hinder new regulations,” exclaims Professor Sharkey.