The phrase “loose lips might sink ships” appeared on American posters during the Second World War, warning against unguarded conversation. Now, 73 years on from Victory over Japan Day on September 2, 1945, which marked the formal conclusion of that horrific epoch, a similarly sloppy attitude to cybersecurity in the workplace can torpedo organisations.

A well-cast “phish” – a fraudulent attempt to hook sensitive data such as usernames, passwords and codes – is capable of inflicting fatal financial and reputational damage. “Successful phishing attacks can sink companies as well as individuals,” says Juliette Rizkallah, chief marketing officer at identity software organisation SailPoint, updating the famous idiom.

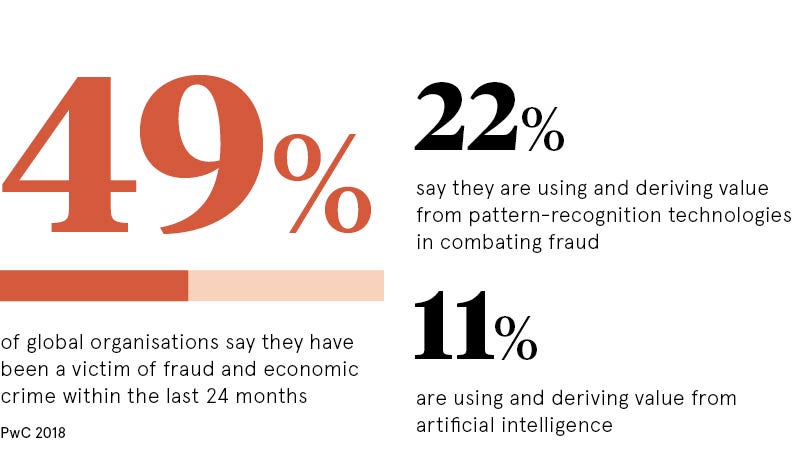

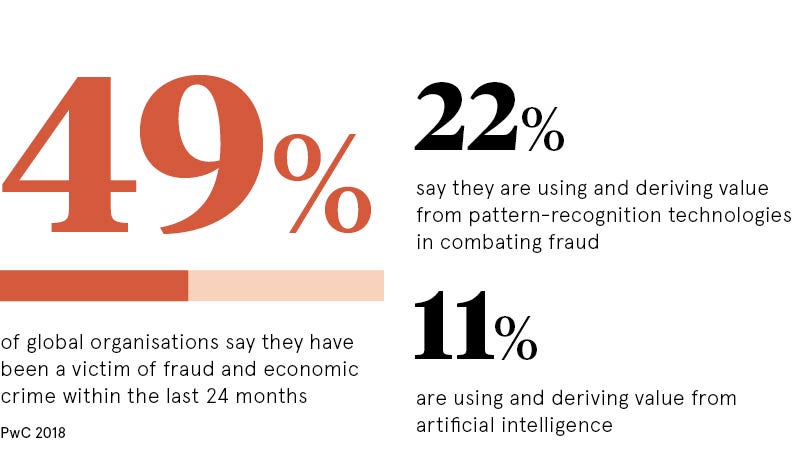

Fraud is endemic in the digital age. The UK loses over £190 billion per year to fraud – more than the government spends on health and defence – according to credit service agency Experian. Organisations of all sizes are failing to keep pace with threats, mostly because of poor human cyber-hygiene. Little wonder hordes of business leaders are turning to artificial intelligence (AI) to fight fraud.

Artificial intelligence can be used both by and against criminals

So how can autonomous technology help? “Artificial intelligence is well suited to fighting fraud because it picks up on patterns and irregularities that humans can’t naturally perceive,” says Stuart Aston, national security officer of Microsoft UK. He points out that his organisation’s Azure Machine Learning is enabling Callcredit, one of the UK’s largest credit reference agencies, to identify criminals who pretend to be someone else when trying to access credit reports and borrow money.

“There are a number of promising innovations, including the ability to look at rich data previously excluded from fraud detection, such as photographs, video and translated audio, that are revolutionising fraud prevention, and with AI tools these tasks can be conducted faster, more efficiently and more precisely than before,” says Mr Aston.

A growing cluster of similar AI-powered fraud prevention software applications is now on the market, such as iovation, pipl and Zonos, though criminals are using the same capabilities in nefarious ways.

“Machine-learning will be essential in developing faster, more intuitive artificial intelligence, but the flip side to that is hackers can deploy machine-learning too,” says Richard Lush, head of cyber-operational security at CGI UK. “An example of this is DeepLocker, which can use AI to hide malware. That’s why it’s really important to dovetail technology with human operators who can bring a level of empathy and intuition that artificial intelligence currently cannot.”

Artificial intelligence will still need human input to fight fraud

Luke Vile, cybersecurity operations director at 2-sec, concurs that adopting artificial intelligence to combat fraudulent attacks is becoming essential. “AI can help to flag fraudulent activity extremely quickly, often within seconds, so that possible crime can be stopped or spotted immediately,” he says. “AI can also be tuned to only alert organisations to fraud rather than ‘possible fraud’, meaning security teams are not spending too much time on activity that is safe, but unusual.”

Martin Balek, machine-learning research director at internet security giant Avast, expands on this theme. “AI hasn’t just improved defences, it has remodelled security with its ability to detect threats in real time and accurately predict emerging threats,” he says. “This is a giant leap forward for the industry. Before AI, this sort of task would have required monumental resources for humans to perform alone.”

It is likely that before long a fully automated, AI-based security system will be effective enough to eliminate a high percentage of fraud without requiring any human input. Indeed, Feedzai recently announced it is bringing automated learning to the fraud space, claiming this to be an industry first. Even so, many within the industry advise that sign-off should not be handed solely to a machine.

“Organisations will always want a final, human pair of eyes to make sure that obvious errors aren’t being caused,” says Mr Vile.

Using AI to fight fraud requires greater levels of transparency

And Mr Lush notes: “Google Cloud are bringing more humans on board to work in their fraud detection operations to safeguard their related customer service offering, so it’s too early to tell if fraud prevention will be fully automated, resulting in the total exclusion of humans.”

Mr Balek adds: “Full automation also has implications under the General Data Protection Regulation (GDPR). Bearing in mind that such technology could constrain the ability of consumers to use their own funds, or to achieve desired outcomes, a company seeking to implement full artificial intelligence would need to display a high level of transparency about its operations.

“Article 13 of the GDPR is clear that the existence of automated decision-making, meaningful information about the logic involved, and the significance and envisaged consequences of such processing must be provided to individuals whose personal data is to be subjected to this processing.”

The real key to fighting fraud is having many layers of security

Limor Kessem, executive security adviser at IBM Security, believes that multiple verification points, the introduction of effective biometric authentication (again using artificial intelligence) and the removal of memorable passwords altogether will bolster digital defences.


“Consumer attitudes and preferences will lead the way in reducing password use and layering security controls to put more hurdles in an attacker’s way,” she says. “On its own, there is not one method that could be considered ‘unhackable’, but layering more than one element can definitely turn hacking an account into a costly endeavour for a criminal.

“In the longer term, AI is sure to become a key part of the way organisations prevent fraud. We need to change the way we manage fraud and face attackers with adaptive technologies that reason like humans do. Over time, these technologies will likely keep reducing fraud rates until a breaking point where the criminal’s return on investment will no longer be lucrative enough.”
