The role of artificial intelligence, or AI, in business has moved beyond early sci-fi notions of movie robots and talking doors. Human-machine interface technologies are evolving at quantum speed, and talking doors are very much a reality. In this world, the more imperfect and almost human the next generation of AI can be, the more “perfect” it becomes.

We can now use emerging AI tools to work out whether social media outputs (tweets, Flickr images, Instagram posts and more) are being generated by so-called software bots programmed by malicious hackers, or whether they are genuinely being made by humans. The central notion here is that computers are still slightly too perfect when they perform any task that mimics human behaviour.

Even when programmed to incorporate common misspellings and local idioms, AI is still too flawless. Humans are more interactive, more colloquial, more context-aware and often altogether more imperfectly entertaining. Programming in sarcasm, humour and the traits of real human personality is still a big ask, it seems.

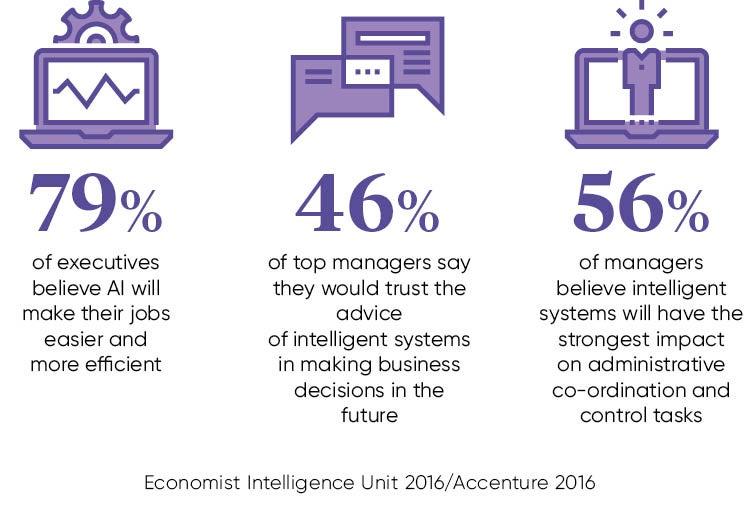

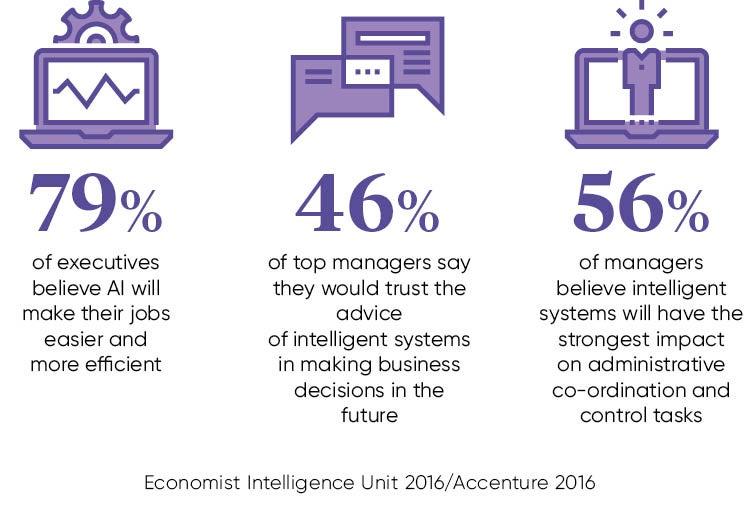

“Medical advancements such as robots taking over dangerous jobs and the automation of mundane tasks are some of the key benefits that AI can bring to people in all walks of life,” says Martin Moran, international managing director at InsideSales, a company that specialises in a self-learning engine for sales acceleration. Mr Moran points to engineering, administration and customer service as three areas set for AI growth.

“Essentially, it is the admin-heavy departments that stand to benefit most from AI today. We have taken AI out of the movies and reached the tangible 2.0 generation of cognitive intelligence,” he says.

Human-like AI

“The next phase of AI will mimic humans more closely and be built on the back of massive processing power, access to vast amounts of data and hugely complex algorithmic logic, just like our own brains. Equally, the true and lasting impact on business will only happen if this AI intelligence is deeply embedded in the workflow process itself.”

Once we can combine the nuances of natural language understanding with human behavioural trends in their proper context, we reach a higher level of AI machine control. Building AI with the idiomatic peculiarities of real people could allow us to use it in real business workshops, factories and offices. So how do we build machines to be more like us?

Operational intelligence company Splunk says the answer to perfectly imperfecting AI lies in the machines, not, in the first instance, in any study of humans. “The bedrock of machine-learning is in the insights that can be found through the analysis of humans interacting with machines, by the residue data left on those machines,” says Guillaume Ayme, IT operations evangelist at Splunk.

“Every human action with a machine leaves a trace of ‘machine data’. Harnessing this data gives us a categorical record of our exact human behaviour, from our activity on an online store to who we communicate with or where we travel through the geolocation settings on a device.”

Mr Ayme points out that most organisations capture this kind of data only partially, and some of it is not tracked at all. Once we start to digitise and track the world around us at a more granular level, we can start to build more human-like AI with a closer appreciation of our behaviour.

The next step for AI is to fit more naturally into what we might call the narrative of human interaction. AI needs to be intrinsically embedded in the fabric of the way firms operate. Only then can the AI brain start to learn about the imperfect world around it. Humans, in turn, need to get used to a future in which we interact and work alongside computer brains on a daily basis.

Language and emotion

A new report by the Project Literacy campaign, convened and overseen by learning services company Pearson, predicts that at the current rate of technological progress, devices and machines powered by AI and voice recognition software will surpass the literacy level of roughly one in six British adults within the next ten years.

“Machine-reading is not close to mastering the full nuances of human language and intelligence, despite this idea capturing the imagination of popular culture in movies. However, advances in technology mean that it is likely ‘machines’ will achieve literacy abilities exceeding those of 16 per cent of British people within the next decade,” says Professor Brendan O’Connor of the University of Massachusetts Amherst.

What happens next with AI is emotional. That is to say, AI will start to be able to understand, classify and then act upon human emotions. Initially, this work has been fairly straightforward. A person showing their teeth in an image is probably smiling and happy. A person with furrowed eyebrows might be angry or frustrated, and so on. More recently, we have started to add contextual information about what the user might be doing or where they might be located, so that a more accurate picture of their mood and emotions can be built up.

Natural language interaction company Artificial Solutions says it is working on the next generation of AI cognizance. By generating computer “conversations” that are far removed from what we might consider textbook English, Artificial Solutions aims to create AI that understands our bad habits and appreciates our unpredictable colloquial nuances. AI today is at the tipping point of becoming emotionally sensitive, believably naturalistic and humanly imperfect. Be nice to your computer; it’s about to get closer to you.
