There has been a lot written about how artificial intelligence (AI), usually in the guise of machine learning, is changing the way we defend networks and data from criminal endeavour. But what if we flip this around: what can AI do for the bad guys?

Forget the rise of the robots enslaving humanity; a far more likely science-fact future sees criminal gangs in possession of AI applications.

What kind of crimes could AI drive?

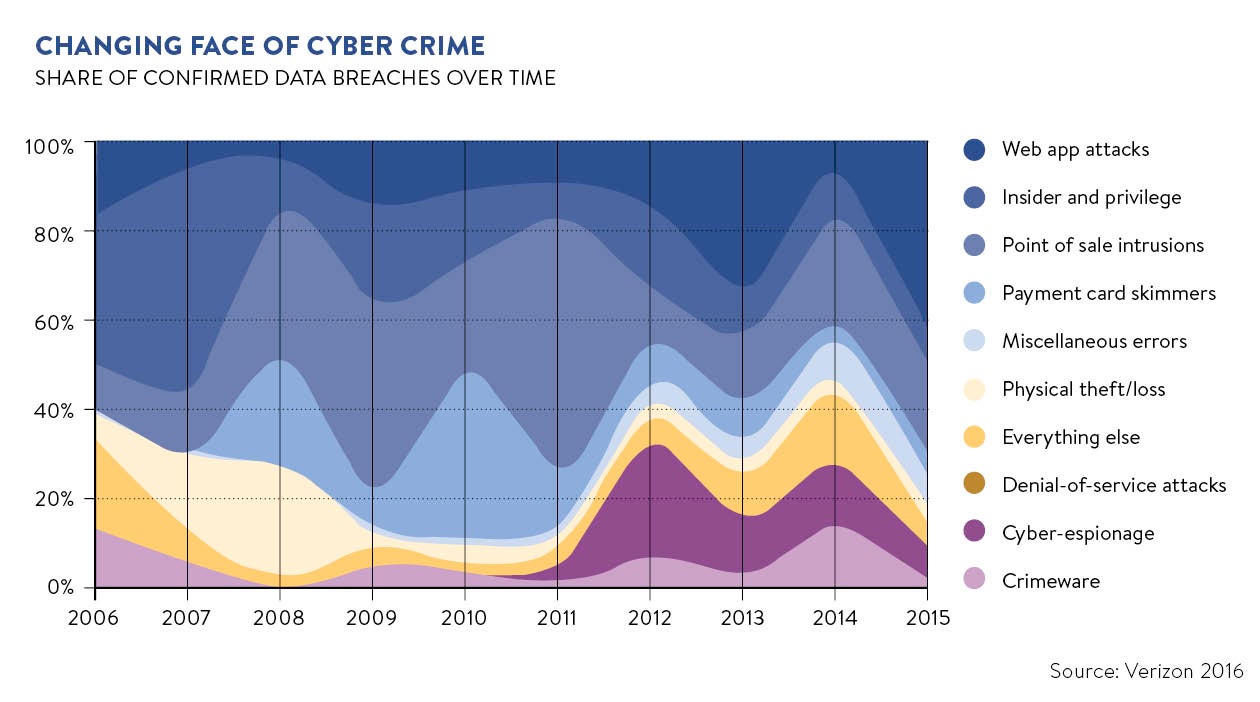

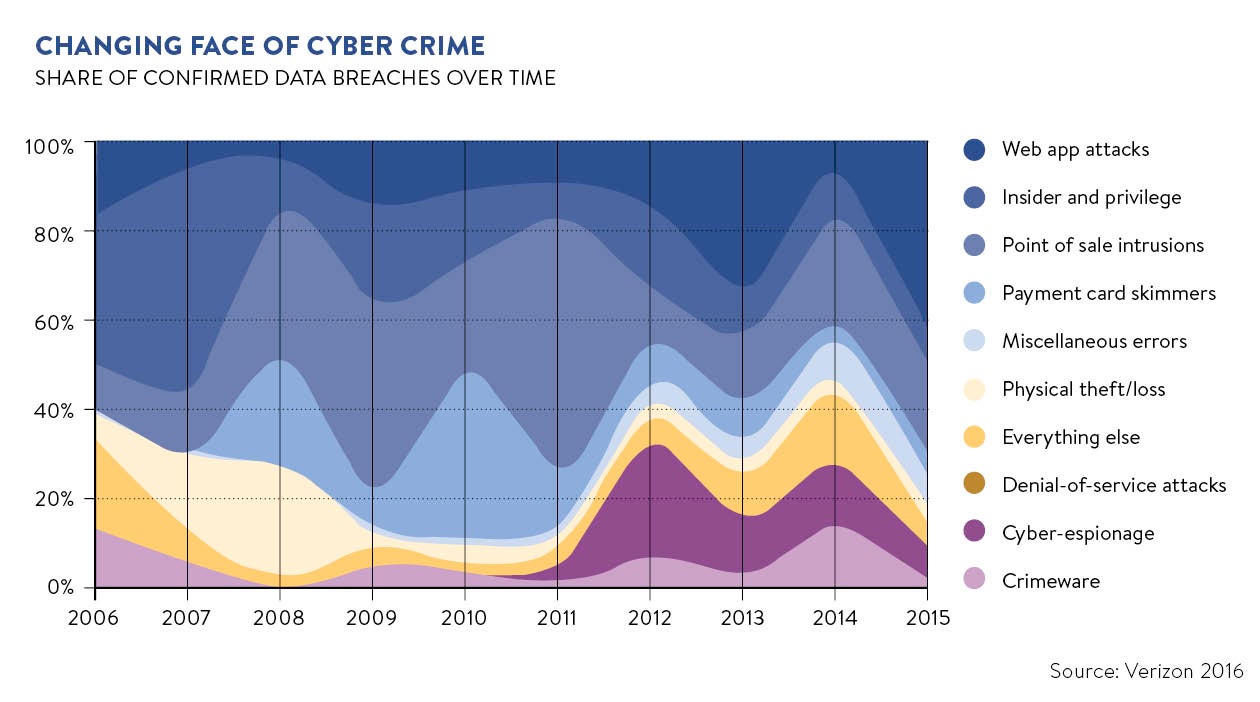

To answer this question, we should first look at where the criminal fraternity is now, as far as shifting the cyber-crime model is concerned. Certainly, over the last few years there has been a move away from traditional online “smash-and-grab” crimes to more sophisticated and structured “as-a-service” models.

These leverage commercially available applications such as predictive data analytics to identify future trends from past attacks and optimal timings, and even victim profiling to determine which targets are most likely to yield the best attack exposure.

“AI can provide cyber criminals with data-driven models for crime ‘return on investment’ much as retailers can predict consumer spending patterns,” warns Peter Tran, general manager of the Worldwide Advanced Cyber Defence Practice at security consultancy RSA. “As-a-service models are quite profitable over the dark web.” All of which is unsurprising as they enable criminals with little technical skill to purchase ready-to-hack toolkits once reserved for the elite hacking underworld.

Not that the criminal underground is short of elite hackers or PhD scientists and maths wizards for that matter. Together they are increasingly employing AI-powered tools to expose weaknesses in corporate defences.

“It is relatively easy for AI-powered tools to learn how to fake biometric data,” says Scott Zoldi, chief analytics officer with behaviour prediction specialists FICO. “This is done by layering noise on top of an image to cause recognition systems to misclassify it. Criminals are developing subtle ways of attacking an organisation that are nearly unperceivable to humans and that can trick the machines we increasingly depend upon to protect us.”
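The noise-layering idea Mr Zoldi describes can be illustrated with a deliberately tiny sketch. It assumes a hypothetical linear “recognition system” scoring a four-pixel image; the weights, bias, pixel values and function names are all invented for illustration and do not represent any real biometric product or attack tool.

```python
# Toy illustration only: a made-up linear classifier over a flattened
# 4-pixel "image". All numbers and names here are hypothetical.

def score(weights, bias, pixels):
    """Linear decision score: positive means 'match'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def classify(weights, bias, pixels):
    """Turn the score into a label."""
    return "match" if score(weights, bias, pixels) > 0 else "no match"


def sign(x):
    """Return -1, 0 or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def perturb(weights, pixels, epsilon):
    """Nudge every pixel by at most epsilon in the direction that raises
    the score (the sign of its weight), keeping values inside [0, 1]."""
    return [
        min(1.0, max(0.0, p + epsilon * sign(w)))
        for w, p in zip(weights, pixels)
    ]


weights = [0.9, -0.6, 0.4, -0.7]   # assumed model parameters
bias = -0.1
image = [0.30, 0.50, 0.40, 0.35]   # assumed input, pixel values in [0, 1]

noisy = perturb(weights, image, epsilon=0.1)

print(classify(weights, bias, image))
print(classify(weights, bias, noisy))
```

With these invented numbers, no pixel moves by more than 0.1, yet the label flips from “no match” to “match”. Real recognition systems are far more complex, but the principle is the same: many small, coordinated changes can add up to a different decision.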

Given the growth in intelligent voice services such as Amazon Echo, Apple Siri and OK Google, it seems likely that voice could also become vulnerable to AI-driven crime, especially as banks move to voice security systems.

“I can foresee this being so vulnerable,” says Tracey Follows, chief strategy and innovation officer from The Future Laboratory, “because it is such a natural human behaviour. Once machines can learn and mimic unique voices they can carry out crimes under a cloak of trust.”

We should also expect a shift in the criminal business model to start affecting strategic business decisions, instead of just stealing data. What if hacktivists could hide smart malware in geophysical survey databases that changes the underlying data, so that multi-million-pound drilling rights are bought in the wrong places and oil rigs come up dry?

“Even if you think the survey database is too well protected,” says Dave Palmer, director of technology at machine-learning security specialist Darktrace, “you could attack the ocean sensors that are collecting the data in the first place, to ensure you are still able to influence the decisions right from the start of the information supply chain.”

Preventing cyber crime

So, what can the programmers of AI applications do to prevent them being used with criminal intent? It’s reassuring to think that Asimov’s three laws of robotics might apply. These dictate that a robot may not injure a human or allow a human to come to harm through inaction; must obey the orders a human gives it unless they conflict with the first law; and must protect itself unless that conflicts with the first or second law.

But is this actually possible? Javvad Malik, an advocate at security vendor AlienVault, likes to think there might be specific use-cases where ethics, parameters or safeguards can be built in. However, as things stand now and in the foreseeable future, Mr Malik sees AI as a knife-like tool. “The knife will cut regardless of whose hand it is in,” he says, “and whatever object it is slicing, be that bread or another person.”

Even if it were possible to embed some kind of ethical safety valve into AI code, Ms Follows points out, whose ethics would it be? “Is it Google’s ethics we are programming into the system or Amazon’s?” she asks. “It seems likely that those who are used to executing and legislating our moral codes will not be the ones in control of the AI.”

So, will ethics be embedded by commercial enterprises and, in that case, what might be their agenda? This is why governments need to get on the front foot with this, and it is a good sign that, in the United States, the White House published its Future of Artificial Intelligence initiatives earlier this year. It’s an area in which the UK currently lags, although MPs on the House of Commons Science and Technology Committee have called for a commission to provide leadership on the legal and ethical implications of AI.

As Bertrand Liard, partner at global law firm White & Case, advises: “The allocation of risk and liability for AI is an enormous decision. While companies are quick to claim ownership of data and discoveries made by such devices, they will undoubtedly want to limit their liability in the event of something going wrong.”

Mr Liard even suggests that maybe a codicil to Asimov’s laws is required. “Every act of AI that causes damage to AI, man or humanity obliges him by whose fault it occurred to repair it,” he says.

One thing we can say with certainty is that both public and commercial enterprises need to prepare for cyber crime rapidly pivoting to AI-driven micro-breaches focused on data manipulation and disruption rather than exfiltration alone.

“Think global financial market manipulation, critical infrastructure disruption and long-term corporate espionage,” says RSA’s Mr Tran.

Think Minority Report, the movie based on Philip K. Dick’s story of a future where people are arrested and convicted before a crime is committed. Ms Follows concludes: “The only way to deal with AI crime will be to forecast it in order to fight it. An industry will spring up that is about tracking behaviours, pattern recognition and anomaly.”
